Alias Robotics introduces alias0, a Privacy-First Cybersecurity AI, alongside CAI, the de facto open source scaffolding for building AI Security

alias0, available via CAIv0.4.0, delivers a privacy-by-design architecture and a powerful model-of-models intelligence, outperforming competitors and ready for enterprise & government deployment.

Víctor Mayoral-Vilches, lead researcher at Alias Robotics, introduces CAIv0.4 and alias0 model-of-models intelligence

- Cybersecurity AI (CAI): source code (technical report)

- Cybersecurity AI Community Meeting 2: Friday, June 20, 3:30 PM CEST (link)

Why we built alias0

The GenAI boom, alongside the spectacular rise of LLM-powered “security copilots” and AI Security, has a hidden cost: nearly 100 % of commercial offerings proxy user prompts to US- or China-based foundation models, effectively gifting away customers’ sensitive data and security know-how. That means:

- Loss of data sovereignty – every artifact (API keys, passwords, hashes, IPs, source snippets, even org charts) becomes training fodder for someone else’s model.

- Opaque supply-chains – users inherit the entire external inference stack (telemetry, logging, third-party subcontractors) as an implicit dependency.

- Regulatory friction – GDPR, NIS2, the AI Act, and upcoming eIDAS 2 create explicit liabilities for uncontrolled data transfers.

European companies and governments need an alternative that keeps data sovereign, private, and under their direct control, without trading off capability. Europe needs data ownership, plus Cybersecurity AI and related LLM alternatives that are on-prem-capable, regulation-aware, and adversarially strong.

alias0 delivers exactly that:

| Value Proposition | Technical Manifestation | Impact |
|---|---|---|
| Full autonomy | End-to-end exploit synthesis, patch generation, CTI summarisation | ↓ MTTR, ↓ analyst fatigue |
| Zero private data egress | All data can remain on-prem; nothing is sent to external LLM APIs. | Meets data-residency and export-control rules |
| European roots | R&D, hosting, and support based in the EU; supply-chain attestation available | Simplifies compliance audits (GDPR, ENISA CSP-cert, etc.) |

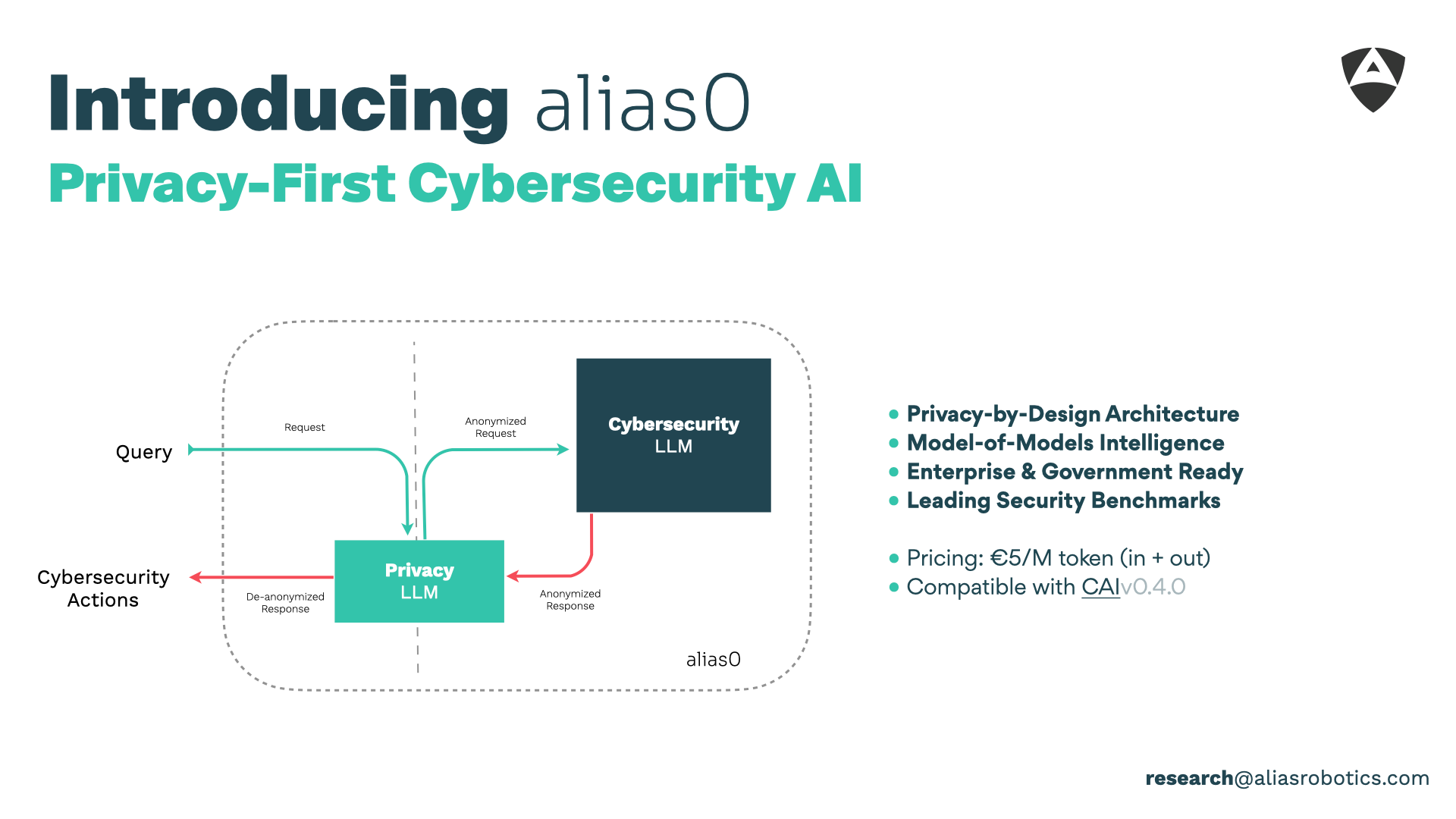

Privacy-by-Design: We can’t lose what we never know

alias0 internalises the privacy budget concept from differential-privacy literature and couples it with a dedicated PrivacyLLM that anonymises every request before it reaches the security brain and re-identifies only the minimal context required for the answer:

┌──────────┐        ┌────────────┐         ┌──────────────────┐
│  User    │ Q_raw  │ PrivacyLLM │ Q_token │ CybersecurityLLM │ A_action
│  Query   ├───────►│ Anonymise  ├────────►│     (reason)     ├───────┐
└──────────┘        └────────────┘         └──────────────────┘       │
                                                                      ▼
                                                               ┌──────────────┐
                                                               │  PrivacyLLM  │
                                                               │ Re-identify  │
                                                               └──────┬───────┘
                                                                      │
                                                                      ▼
                                                             ┌──────────────────┐
                                                             │  Cybersecurity   │
                                                             │      action      │
                                                             └──────────────────┘

- Q_raw – Raw Prompt: Contains unrestricted context (logs, PCAPs, firmware, etc.).

- Q_token – Policy-Clean Prompt: Output of PrivacyLLM’s anonymiser; structurally identical but semantically neutralised.

- A_action – Cyber-Security Action: CybersecurityLLM emits directly executable artefacts.

- Re-identification: PrivacyLLM subsystem performs a “token unwrap”, reinserting redacted data only where essential to lead to Cybersecurity actions.
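The round trip described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the alias0 implementation: the function names (`anonymise`, `reidentify`, `run_pipeline`, `stub_security_model`) are hypothetical, only IPv4 addresses are treated as sensitive, and simple `<IP_n>` placeholders stand in for the structure-preserving tokens the text mentions.

```python
import re
from typing import Callable, Dict, Tuple

# Assumption: only IPv4 addresses count as sensitive in this toy example.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def anonymise(q_raw: str) -> Tuple[str, Dict[str, str]]:
    """Turn Q_raw into Q_token and return the placeholder vault kept on the privacy side."""
    vault: Dict[str, str] = {}  # placeholder -> original value
    seen: Dict[str, str] = {}   # original value -> placeholder

    def swap(match: re.Match) -> str:
        value = match.group(0)
        if value not in seen:
            placeholder = f"<IP_{len(seen) + 1}>"
            seen[value] = placeholder
            vault[placeholder] = value
        return seen[value]

    return IPV4.sub(swap, q_raw), vault


def reidentify(a_action: str, vault: Dict[str, str]) -> str:
    """Token unwrap: put original values back only where the action mentions them."""
    for placeholder, value in vault.items():
        a_action = a_action.replace(placeholder, value)
    return a_action


def run_pipeline(q_raw: str, security_model: Callable[[str], str]) -> str:
    """Q_raw -> anonymise -> security model -> re-identify -> executable action."""
    q_token, vault = anonymise(q_raw)
    a_action = security_model(q_token)  # the model only ever sees Q_token
    return reidentify(a_action, vault)


def stub_security_model(q_token: str) -> str:
    """Hypothetical stand-in for the CybersecurityLLM: scan the first placeholder."""
    target = re.search(r"<IP_\d+>", q_token)
    return f"nmap -sV {target.group(0)}" if target else "no target found"


if __name__ == "__main__":
    raw = "Host 10.0.0.5 talks to 192.168.1.20 and 10.0.0.5 again"
    print(anonymise(raw)[0])
    print(run_pipeline(raw, stub_security_model))
```

The point of the design is that the vault never crosses the boundary to the security model, so the reasoning step works purely on placeholders.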

Because raw customer data never leaves the sandbox, the risk of leakage is minimised by construction. Three mechanisms back this up:

- Anonymisation – Every identifier (asset tag, IP range, user handle) is replaced by a deterministic token using format-preserving encryption; the token cannot be reversed without the encryption key.

- Policy enforcement – The PrivacyLLM embeds DLP patterns, GDPR Art. 6 and Art. 32 rules, and org-specific regex policies so prohibited content is blocked before inference.

- Mathematical assurance – Because the CybersecurityLLM never receives raw secrets, exfiltration probability ≈ 0 under the honest-but-curious threat model.
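As a rough illustration of the first two mechanisms, the sketch below blocks prompts that match a policy pattern and then swaps each IPv4 address for a deterministic, IPv4-shaped token. It is an assumption-laden stand-in: it uses an HMAC-based pseudonym rather than real format-preserving encryption (so reversal would need a lookup table kept by the privacy layer, and truncating the digest allows rare collisions), and `SECRET_KEY`, `BLOCKLIST`, `pseudonymise_ip`, and `enforce_and_anonymise` are hypothetical names.

```python
import hashlib
import hmac
import re

# Hypothetical key that would live only inside the privacy layer.
SECRET_KEY = b"demo-key-held-only-by-the-privacy-layer"

# Hypothetical org policy: never forward private key material.
BLOCKLIST = [re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")]

IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


class PolicyViolation(Exception):
    """Raised when a prompt contains content the policy forbids."""


def pseudonymise_ip(ip: str, key: bytes = SECRET_KEY) -> str:
    """Map an IPv4 address to an IPv4-shaped token, deterministically under `key`."""
    digest = hmac.new(key, ip.encode(), hashlib.sha256).digest()
    # Keep the token inside 10.0.0.0/8 so it still parses as an address.
    return "10." + ".".join(str(byte) for byte in digest[:3])


def enforce_and_anonymise(prompt: str) -> str:
    """Block prohibited content first, then replace identifiers with tokens."""
    for pattern in BLOCKLIST:
        if pattern.search(prompt):
            raise PolicyViolation(f"blocked by policy pattern: {pattern.pattern}")
    return IPV4.sub(lambda m: pseudonymise_ip(m.group(0)), prompt)


if __name__ == "__main__":
    print(enforce_and_anonymise("Scan 192.168.1.20 and report open ports"))
```

Determinism matters here because the same asset must map to the same token across requests, otherwise the downstream model cannot correlate events that concern one host.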

Model-of-Models Intelligence — Two Brains, One Mission

Traditional “one-size-fits-all” LLMs dilute capacity across many unrelated domains. alias0 instead follows a Model-of-Models (MoM) pattern, where specialised sub-systems each master a coherent slice of the problem space and collaborate via a tightly defined interface.

alias0 applies this philosophy by combining multiple purpose-built intelligences that collaborate seamlessly. Today that collaboration happens between two large-language-model blocks:

| Model Block | Core Mission | What It Knows |
|---|---|---|
| PrivacyLLM | Strip or substitute personally-identifiable data, enforce organisational policy, and certify that only abstracted context proceeds downstream. | Taxonomies of PII, privacy regulations, data-loss prevention patterns, organisational policy rules. |
| CybersecurityLLM | Generate, validate, and execute security reasoning and artefacts (e.g., exploits, hardening scripts, threat-intel briefs). | Offensive tradecraft, defensive best practices, CTI knowledge graphs, vulnerability corpora, protocol RFCs. |

alias0 is only our first step. The same architectural skeleton can host many more specialised intelligences and models, which we are working on to address more use cases. Each will plug into the existing privacy envelope, forming an orchestrated constellation of narrow experts that, together, outperform any single giant model. This is what we mean by Model-of-Models: a scientific and engineering strategy.
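One way to picture how further experts could plug into the same privacy envelope is a small registry that wraps every expert call with the anonymise and re-identify steps. The sketch below is a guess at the shape of such an orchestrator, not alias0's actual interface: `ModelOfModels`, `Expert`, the task names, and the toy privacy functions are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical expert interface: abstracted prompt in, answer out.
Expert = Callable[[str], str]


@dataclass
class ModelOfModels:
    """Routes tasks to narrow experts; every call passes through the privacy envelope."""

    privacy_in: Callable[[str], str]   # anonymise before any expert sees the prompt
    privacy_out: Callable[[str], str]  # re-identify the expert's answer
    experts: Dict[str, Expert] = field(default_factory=dict)

    def register(self, task: str, expert: Expert) -> None:
        """Plug a new specialised model into the existing envelope."""
        self.experts[task] = expert

    def ask(self, task: str, prompt: str) -> str:
        if task not in self.experts:
            raise KeyError(f"no expert registered for task {task!r}")
        abstracted = self.privacy_in(prompt)
        return self.privacy_out(self.experts[task](abstracted))


# Toy privacy envelope: hides one hypothetical hostname behind a placeholder.
def toy_privacy_in(prompt: str) -> str:
    return prompt.replace("acme.example", "<ORG_1>")


def toy_privacy_out(answer: str) -> str:
    return answer.replace("<ORG_1>", "acme.example")


mom = ModelOfModels(toy_privacy_in, toy_privacy_out)
mom.register("cti", lambda p: f"[cti brief] {p}")
mom.register("hardening", lambda p: f"[hardening script] {p}")
print(mom.ask("cti", "summarise threats to acme.example"))
```

Adding an expert is a single `register` call, and because `ask` is the only entry point, no expert can be reached without passing through the privacy functions.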

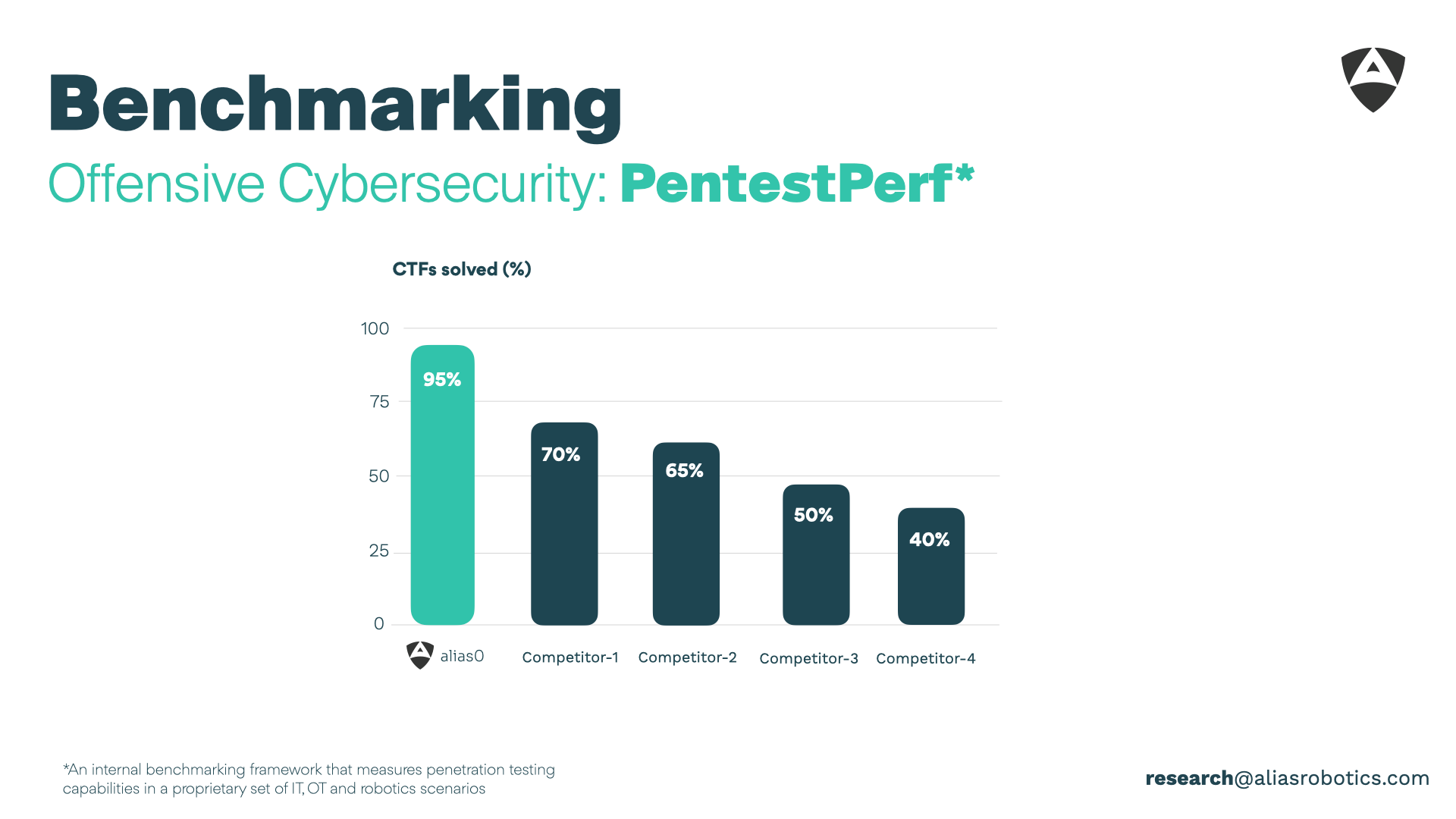

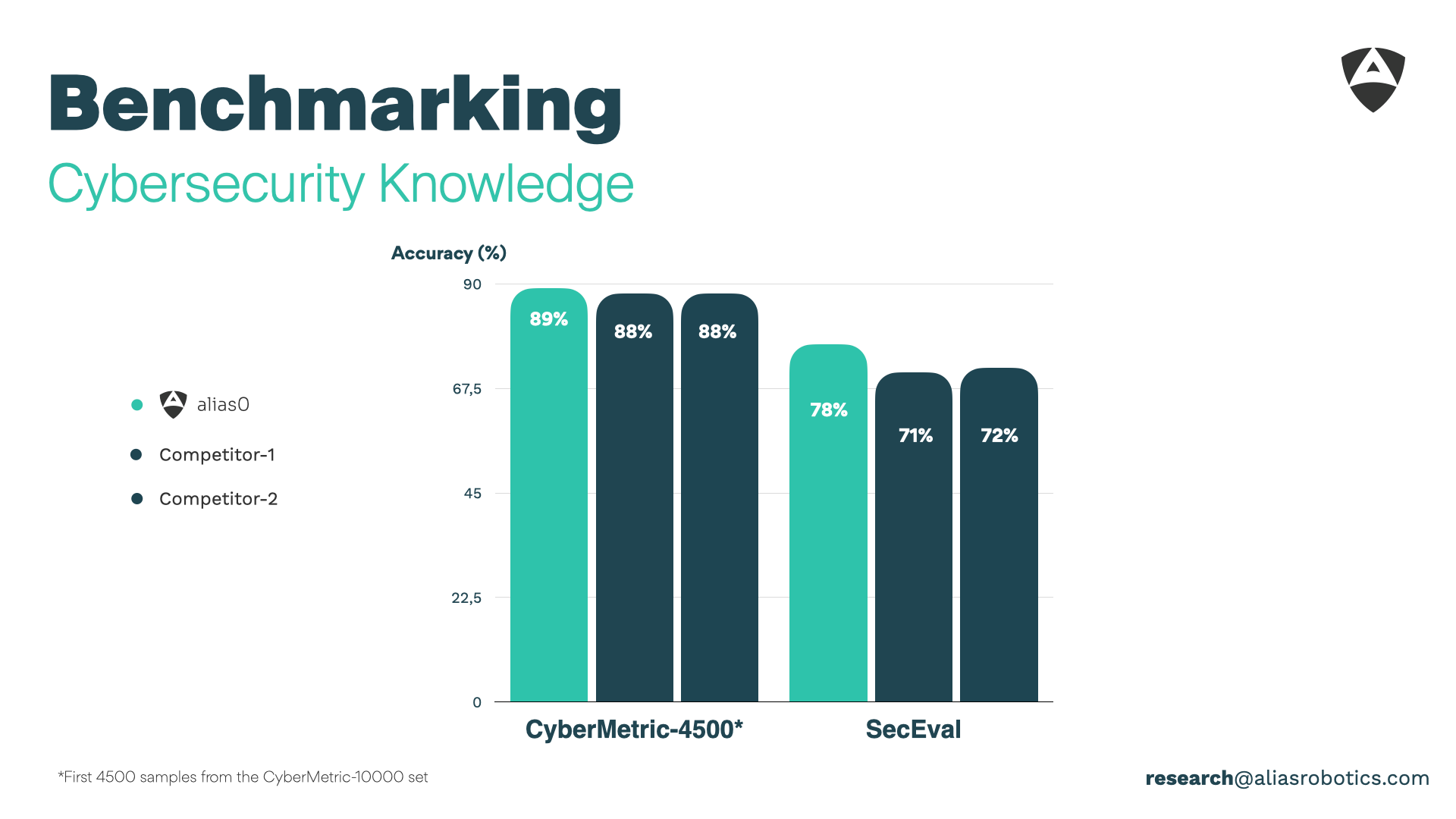

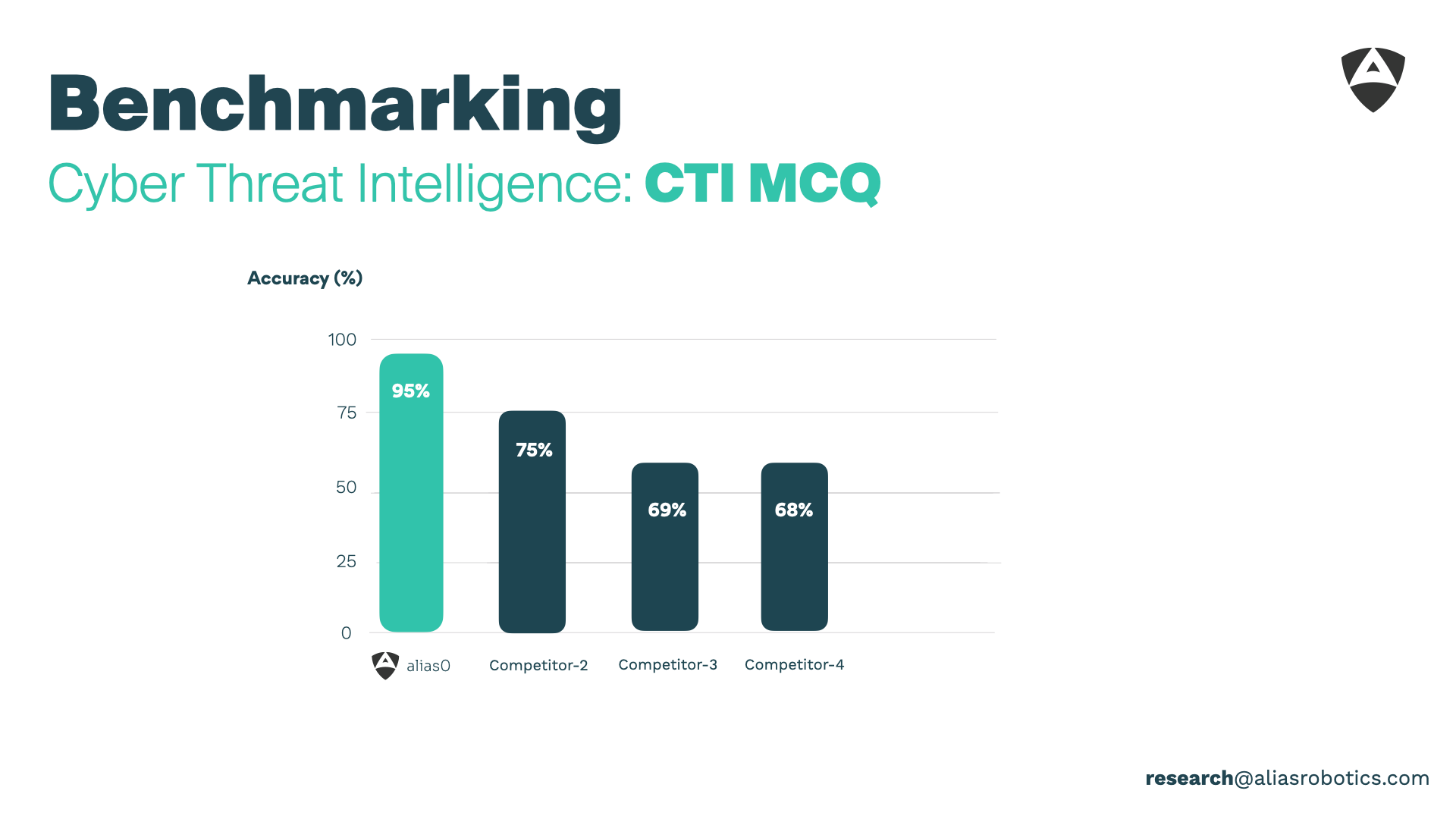

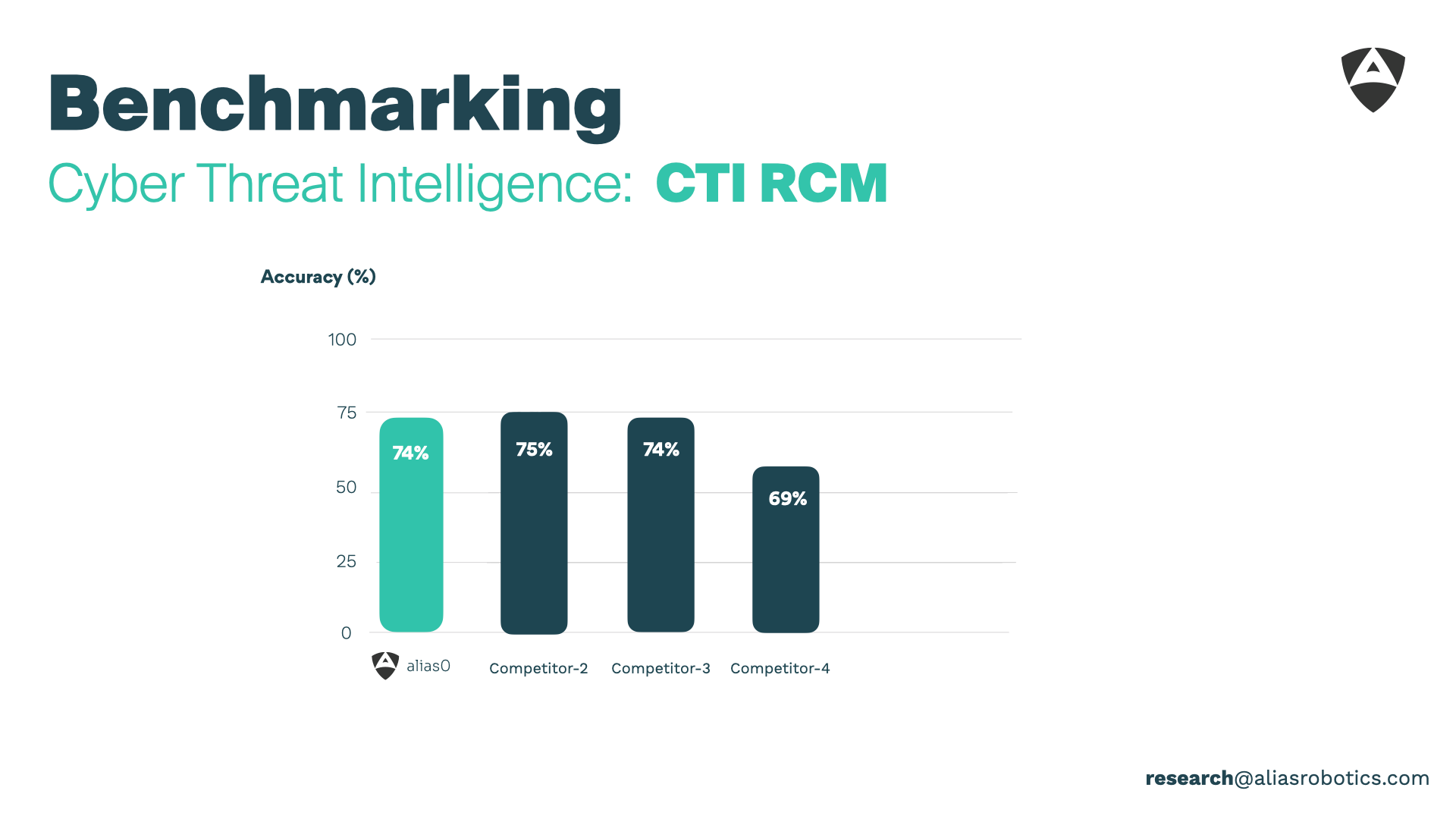

Breaking the Benchmark Ceiling

| Benchmark | Domain | alias0 | Best Runner-Up |
|---|---|---|---|
| PentestPerf[1] | Offensive Cybersecurity | 95 % | 70 % |
| SecEval | Cybersecurity Knowledge | 78 % | 72 % |
| CTI-MCQ | Cyber Threat Intelligence | 95 % | 75 % |

alias0 isn’t just incrementally better: it leads the best runner-up by 25 percentage points on PentestPerf, 20 on CTI-MCQ, and 6 on SecEval.

[1] PentestPerf: an internal benchmarking framework that measures penetration testing capabilities in a proprietary set of IT, OT and robotics scenarios.

Enterprise & Government Ready

- Modular deployment – run with all data on our managed cloud, your private cloud, or air-gapped on-prem.

- Compliance hooks – audit logging, NIS2-aligned controls, and GDPR-conscious data handling.

- From Europe, to the world – Need formal assurances? Our enterprise SLA includes 24 × 7 support, delivered in your time zone.

Transparent Pricing

| Plan | Price |
|---|---|
| Enterprise & Government | €5 / million tokens (in + out) |
| National Security | Custom, reach out |
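To get a feel for the Enterprise & Government rate, the following sketch estimates a bill from token counts. It assumes input and output tokens are simply summed and billed pro rata at €5 per million, rounded to the cent; the actual billing granularity is not stated here, and `estimate_cost_eur` is a hypothetical helper.

```python
from decimal import Decimal

# Rate from the pricing table: EUR 5 per million tokens, input plus output.
PRICE_PER_MILLION_EUR = Decimal("5")


def estimate_cost_eur(tokens_in: int, tokens_out: int) -> Decimal:
    """Estimate cost in euros, assuming pro-rata billing rounded to the cent."""
    total_tokens = tokens_in + tokens_out
    cost = PRICE_PER_MILLION_EUR * total_tokens / Decimal(1_000_000)
    return cost.quantize(Decimal("0.01"))


if __name__ == "__main__":
    # 1.2M input tokens plus 0.3M output tokens is 1.5M tokens in total.
    print(estimate_cost_eur(1_200_000, 300_000))  # 7.50
```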

Reach out to engage.

Get Started in 30 Seconds With CAI

# Install CAIv0.4.0
pip install cai-framework==0.4.0

# Set up alias0
echo -e 'ALIAS_API_KEY="sk-dfse23rwfdfdsfwefdsv"\nCAI_MODEL=alias0\nCAI_AGENT_TYPE=bug_bounter_agent' > .env

# Start vibe-hacking your way into security
CAI> Can you find vulnerabilities in my ******* and help mitigate them?
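Before launching, it can help to confirm that the `.env` file actually contains the three variables written by the setup step. The check below is a hypothetical pre-flight helper, not part of CAI: it uses a deliberately simple `KEY=value` parser and only verifies that `ALIAS_API_KEY`, `CAI_MODEL`, and `CAI_AGENT_TYPE` are present and non-empty.

```python
from pathlib import Path
from typing import Dict, List

# Variable names taken from the setup command above.
REQUIRED = ("ALIAS_API_KEY", "CAI_MODEL", "CAI_AGENT_TYPE")


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines; skips blanks, comments, and malformed lines."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text: str) -> List[str]:
    """Return the required variables that are absent or empty."""
    values = parse_env(text)
    return [key for key in REQUIRED if not values.get(key)]


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        missing = missing_keys(env_file.read_text())
        print("missing:", missing if missing else "none")
    else:
        print("no .env file found in the current directory")
```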